The Art of JavaScript Generators

Introduction

What Are Generators?

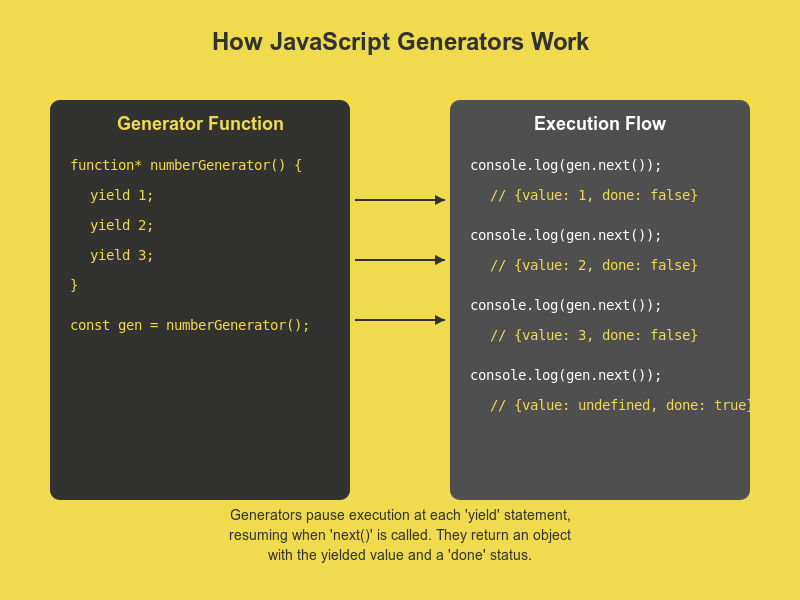

JavaScript generators are a special kind of function that can pause partway through and resume later. That makes them useful for producing values lazily, one at a time, and for splitting long-running work into steps. A normal function runs to completion once it is called; a generator lets the caller decide when, and how much of, the function body executes. Generators are declared with the `function*` syntax.
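One way to see the pause/resume behavior is to record when the body actually runs. In this sketch, `log`, `traced` and `tracer` are illustrative names, not part of any API, and `yield` simply marks each pause point. Calling `traced()` creates a generator object without executing the body, and each `.next()` call runs the body only as far as the next `yield`.

```javascript
// Illustrative only: `log` records which parts of the generator body have run.
const log = [];

function* traced() {
  log.push("start");
  yield "a"; // pause point 1
  log.push("resumed after a");
  yield "b"; // pause point 2
  log.push("finished");
}

const tracer = traced();            // creates the generator object; the body has NOT run yet
const beforeFirstNext = [...log];   // snapshot: nothing logged

tracer.next();                      // runs the body up to the first yield
const afterFirstNext = [...log];    // snapshot: ["start"]

tracer.next();                      // resumes and runs up to the second yield
const afterSecondNext = [...log];   // snapshot: ["start", "resumed after a"]
```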

The key feature of generators is the `yield` keyword, which hands a value back to the caller and pauses the function's execution. Calling a generator function does not run its body; it returns a generator object. Each call to that object's `.next()` method resumes execution from where it last paused and continues until the next `yield` statement or the end of the function.
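The sketch below (the `counter` generator is a hypothetical example) shows both halves of that description: the local variable `count` keeps its value across pauses, and once the body reaches its end, `.next()` reports `done: true`.

```javascript
// Local variables survive each pause because the generator keeps its execution state.
function* counter(limit) {
  let count = 0;
  while (count < limit) {
    count += 1;
    yield count; // hand `count` to the caller and pause here
  }
  return "done"; // reaching a return ends the generator
}

const c = counter(2);
const results = [c.next(), c.next(), c.next(), c.next()];
// results[0] -> { value: 1, done: false }
// results[1] -> { value: 2, done: false }
// results[2] -> { value: "done", done: true }
// results[3] -> { value: undefined, done: true }  (a finished generator stays finished)
```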

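Generator objects are also iterators (and iterables), so they can be consumed with `for...of` loops, spread syntax, or explicit `.next()` calls. Each call to `.next()` returns an object of the form `{ value, done }`: `value` holds the yielded value, and `done` becomes `true` once the generator has finished.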
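Because every generator object implements the iterator protocol, the same values can be pulled by a loop, by spread syntax, or by hand. The `letters` generator here is just an example; note that `for...of` and spread stop as soon as `done` is `true`, so they never see the `return` value.

```javascript
function* letters() {
  yield "x";
  yield "y";
  return "ignored by for...of"; // dropped by for...of and spread, which stop at done: true
}

// for...of calls .next() behind the scenes until done is true.
const viaLoop = [];
for (const letter of letters()) {
  viaLoop.push(letter);
}

// Spread syntax and Array.from consume the same iterator protocol.
const viaSpread = [...letters()];
const viaArrayFrom = Array.from(letters());

// Manual stepping exposes the { value, done } objects directly.
const manual = letters();
const first = manual.next(); // { value: "x", done: false }
```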

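A common use case for generators is working with large datasets or infinite sequences: because values are produced only when they are requested, data can be processed incrementally without holding the whole collection in memory. Another popular use is asynchronous programming, where pausing at each step lets asynchronous code read top to bottom like sequential code. Async generators (`async function*`, used in the Node.js example below) combine this with `await`.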
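As a sketch of that incremental style, here is an infinite sequence combined with two small helpers, `take` and `mapValues`, which are hypothetical names written for this example. Only the five values that are actually consumed are ever computed, even though `naturals()` never ends on its own.

```javascript
// An infinite sequence: safe because each value is produced only on request.
function* naturals() {
  let n = 1;
  while (true) {
    yield n++;
  }
}

// Hypothetical helper: lazily pass through the first `count` values of any iterable.
function* take(iterable, count) {
  if (count <= 0) return;
  let taken = 0;
  for (const value of iterable) {
    yield value;
    taken += 1;
    if (taken >= count) return; // exiting the loop calls return() on the source iterator
  }
}

// Hypothetical helper: lazily transform each value.
function* mapValues(iterable, fn) {
  for (const value of iterable) {
    yield fn(value);
  }
}

const firstFiveSquares = [...take(mapValues(naturals(), (n) => n * n), 5)];
// [1, 4, 9, 16, 25]
```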

Here's a quick example of a generator:

```javascript
function* numberGenerator() {
  yield 1;
  yield 2;
  yield 3;
}

const gen = numberGenerator();

console.log(gen.next().value); // 1
console.log(gen.next().value); // 2
console.log(gen.next().value); // 3
```


Frontend Use Case: Infinite Scrolling in React
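The React component in this section relies on one idea: a generator that remembers which page comes next. Isolated from React and the network, that idea can be sketched as follows. `pageRequests`, `fakeFetchPage` and `loadPages` are hypothetical names, and the fake function stands in for the real `fetch` call so the sketch runs offline.

```javascript
// Yields one pending request per .next() call; the page counter lives inside the generator.
function* pageRequests(fetchPage) {
  let page = 1;
  while (true) {
    yield fetchPage(page); // yields a Promise; the caller decides when to await it
    page += 1;
  }
}

// Hypothetical stand-in for fetch(...).then((response) => response.json()).
function fakeFetchPage(page) {
  return Promise.resolve([
    { id: page * 10 + 1, name: `item ${page}-1` },
    { id: page * 10 + 2, name: `item ${page}-2` },
  ]);
}

// Pulls `count` pages in order and flattens them into one list.
async function loadPages(count) {
  const requests = pageRequests(fakeFetchPage);
  const items = [];
  for (let i = 0; i < count; i += 1) {
    const { value } = requests.next(); // synchronous: returns { value: Promise, done }
    items.push(...(await value));
  }
  return items;
}
```

Yielding the Promise, rather than awaiting inside the generator, keeps this a plain synchronous generator. An `async function*` would be an equally valid design; its `.next()` returns a Promise of `{ value, done }`, so the caller would write `(await requests.next()).value` instead.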

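```javascript
import React, { useState } from "react";

// Yields one pending request per .next() call; the page counter lives inside the generator.
// api.example.com is a placeholder assumed to return a JSON array of { id, name } items.
function* dataFetcher() {
  let page = 1;
  while (true) {
    yield fetch(`https://api.example.com/data?page=${page}`).then((response) => {
      if (!response.ok) throw new Error(`Request failed: ${response.status}`);
      return response.json();
    });
    page++;
  }
}

function InfiniteScrollList() {
  const [items, setItems] = useState([]);
  const [loading, setLoading] = useState(false);
  // Lazy initializer: the generator is created once and survives re-renders.
  const [fetcher] = useState(() => dataFetcher());

  const loadMore = async () => {
    if (loading) return; // ignore clicks while a page is in flight
    setLoading(true);
    try {
      // next() is synchronous; its `value` is the Promise yielded by dataFetcher.
      const { value } = fetcher.next();
      const newItems = await value;
      setItems((prevItems) => [...prevItems, ...newItems]);
    } catch (error) {
      console.error(error);
    } finally {
      setLoading(false);
    }
  };

  // A button keeps the example simple; true infinite scrolling would call loadMore
  // when the end of the list scrolls into view (for example via an IntersectionObserver).
  return (
    <div>
      <ul>
        {items.map((item) => (
          <li key={item.id}>{item.name}</li>
        ))}
      </ul>
      <button onClick={loadMore} disabled={loading}>
        {loading ? "Loading..." : "Load More"}
      </button>
    </div>
  );
}

export default InfiniteScrollList;
```

Backend Use Case: Streaming Large Datasets with Node.js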

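```javascript
const { MongoClient } = require("mongodb");

// Yields the collection in batches of `batchSize` documents.
// Pages by _id (sorted ascending) instead of skip(): skip() has to walk past every
// skipped document on each query, and without a sort the order is not guaranteed.
async function* largeDatasetStreamer(collection, batchSize = 1000) {
  let lastId;
  while (true) {
    const filter = lastId === undefined ? {} : { _id: { $gt: lastId } };
    const batch = await collection
      .find(filter)
      .sort({ _id: 1 })
      .limit(batchSize)
      .toArray();
    if (batch.length === 0) break;
    yield batch;
    lastId = batch[batch.length - 1]._id;
  }
}

async function processLargeDataset() {
  const client = new MongoClient("mongodb://localhost:27017");
  await client.connect();
  try {
    const db = client.db("mydatabase");
    const collection = db.collection("largedata");

    for await (const batch of largeDatasetStreamer(collection)) {
      for (const item of batch) {
        // Process each item
        console.log(item);
      }
    }
  } finally {
    // Close the connection even if processing throws.
    await client.close();
  }
}

processLargeDataset().catch(console.error);
```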
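The batching logic does not need a running database to be exercised. The sketch below replaces MongoDB with a hypothetical in-memory `makeFakeSource` function so that the async generator pattern (`async function*` consumed with `for await...of`) can be run and checked in isolation. It pages by resuming after the last `_id` it has seen rather than by using `skip`; `batches` and `collectBatchSizes` are illustrative names, not driver APIs.

```javascript
// Hypothetical in-memory stand-in for a collection:
// returns up to `limit` documents whose _id is greater than `afterId`, in _id order.
function makeFakeSource(docs) {
  const sorted = [...docs].sort((a, b) => a._id - b._id);
  return async (afterId, limit) =>
    sorted.filter((d) => afterId === undefined || d._id > afterId).slice(0, limit);
}

// Range-based batching: each query resumes after the last _id seen.
async function* batches(fetchBatch, batchSize) {
  let lastId;
  while (true) {
    const batch = await fetchBatch(lastId, batchSize);
    if (batch.length === 0) return;
    yield batch;
    lastId = batch[batch.length - 1]._id;
    if (batch.length < batchSize) return; // a short batch means nothing is left
  }
}

// Consumes the async generator and records the size of each batch.
async function collectBatchSizes(docs, batchSize) {
  const sizes = [];
  for await (const batch of batches(makeFakeSource(docs), batchSize)) {
    sizes.push(batch.length);
  }
  return sizes;
}
```

Stopping on a short batch saves one final empty query; the `batch.length === 0` check still covers a collection whose size is an exact multiple of `batchSize`.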